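Traditional machine learning workflows focus mainly on model training and optimization: the best model is usually chosen by a performance metric such as accuracy or error, and once a model passes some threshold on these metrics, we tend to assume it is good enough. In many applications of machine learning, however, users rely on a model to help them make decisions. A doctor, for example, will not operate on a patient simply because a model says so.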

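Because complex machine learning models are essentially black boxes, the classification decisions they make are often hard for humans to understand. Yet being able to understand and explain these models is important for improving model quality, increasing trust and transparency, and reducing bias, because humans often have good intuition and causal reasoning that are hard to capture in evaluation metrics.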

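We would therefore like an intuitive understanding of how these models work: why does a model assign a particular label to a given case? For example, why is a breast-mass sample classified as "malignant" rather than "benign"? Just because it looks ugly?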

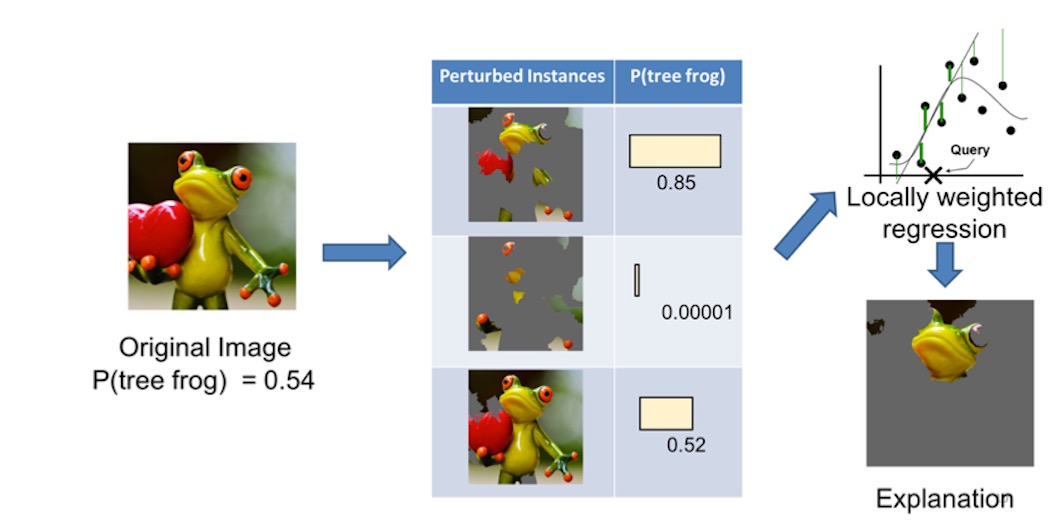

Local Interpretable Model-Agnostic Explanations (LIME) is an attempt to make these complex models at least partly understandable. The method was published in the paper *"Why Should I Trust You?": Explaining the Predictions of Any Classifier* by Marco Tulio Ribeiro, Sameer Singh and Carlos Guestrin of the University of Washington in Seattle.
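For reference, the paper frames an explanation as the interpretable model $g$ (from a class $G$, e.g. linear models) that best mimics the black-box model $f$ near the instance $x$ while staying simple. Here $\pi_x$ weights perturbed samples $z$ by their proximity to $x$, $z'$ is the interpretable representation of $z$, $\Omega(g)$ penalizes complexity, and $D$ is a distance function with kernel width $\sigma$:

$$
\xi(x) = \operatorname*{argmin}_{g \in G} \; \mathcal{L}(f, g, \pi_x) + \Omega(g)
$$

$$
\mathcal{L}(f, g, \pi_x) = \sum_{z, z' \in \mathcal{Z}} \pi_x(z) \, \bigl(f(z) - g(z')\bigr)^2,
\qquad
\pi_x(z) = \exp\!\left(-\frac{D(x, z)^2}{\sigma^2}\right)
$$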

## How LIME works

LIME can explain any model for which we can obtain prediction probabilities (in R, that is every model that works with `predict(type = "prob")`).
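The core idea can be illustrated with a minimal NumPy sketch, under several assumptions: this is not the R `lime` package or any library's API, and `black_box_proba`, `lime_explain` and all parameter values are hypothetical names chosen for illustration. It perturbs samples around one instance, queries the black box only through its predicted probabilities, weights each sample with an exponential proximity kernel, and fits a weighted linear surrogate whose coefficients act as the local explanation. The actual method additionally maps features to an interpretable representation and selects a small number of features, which this sketch skips.

```python
import numpy as np


def black_box_proba(X):
    # Hypothetical stand-in for any classifier: returns P(class = 1)
    # from a nonlinear function of two features.
    z = 3.0 * X[:, 0] ** 2 - 2.0 * X[:, 1]
    return 1.0 / (1.0 + np.exp(-z))


def lime_explain(predict_proba, x, n_samples=5000, kernel_width=0.75,
                 scale=1.0, seed=0):
    """Sketch of a LIME-style local linear explanation (assumed parameters)."""
    rng = np.random.default_rng(seed)
    # 1. Perturb: draw Gaussian samples around the instance x.
    Z = x + rng.normal(0.0, scale, size=(n_samples, x.size))
    # 2. Query the black box only through its predicted probabilities.
    y = predict_proba(Z)
    # 3. Weight samples by proximity: exp(-d^2 / sigma^2) with Euclidean d.
    d = np.linalg.norm(Z - x, axis=1)
    w = np.exp(-(d ** 2) / kernel_width ** 2)
    # 4. Weighted least squares for g(z) = b0 + b . (z - x),
    #    solved by scaling rows with sqrt(w).
    A = np.hstack([np.ones((n_samples, 1)), Z - x])
    sw = np.sqrt(w)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return coef[0], coef[1:]  # local prediction at x, per-feature weights


x = np.array([1.0, 0.5])
intercept, weights = lime_explain(black_box_proba, x)
print(intercept, weights)
```

Near `x = [1.0, 0.5]`, the first feature pushes the probability up and the second pushes it down, so the surrogate's weights should be positive and negative respectively. Centering the design on `x` makes the intercept the surrogate's prediction at the instance itself.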